The Raspberry Pi HQ camera and the Arducam circuit board

The Raspberry Pi High Quality (HQ) camera is a high-resolution camera intended for the Raspberry Pi. It interfaces with the Raspberry Pi SBC via a ribbon cable. Part of the work of the camera is done by the GPU on the SoC.

We have tested the Pi HQ camera previously to assess its suitability as an astronomical imaging device.

The first test, on December 26th 2020, used a Raspberry Pi 4B with a Python capture program I had written. Very promising results were obtained, including some 8-bit, 10s exposures of the Orion nebula, as well as some lunar data. The quality of the images obtained was quite good and they showed very few compression artefacts. The blog article can be read at

https://x-bit-astro-imaging.blogspot.com/2020/12/evaluation-raspberry-pi-high-quality.html

The second test, on January 22nd 2021, used AstroDMx Capture for the Raspberry Pi to control the Pi HQ camera and capture lunar data.

https://x-bit-astro-imaging.blogspot.com/2021/01/raspberry-pi-hq-camera-work-in-progress.html

The camera was working in its normal mode with the Raspberry Pi, in which it constantly streams data to the GPU. The maximum resolution we were able to use was 1600 x 1200. The lunar data were acceptable, but some very slight compression artefacts were evident.

The tests on these previous occasions used the Pi HQ camera in its normal configuration, attached via a ribbon cable to the camera port on the Raspberry Pi SBC.

The current test involved fitting an Arducam circuit board, which connects via a ribbon cable to the Pi HQ camera. Using plastic standoffs, the Arducam adaptor sits as a second layer above the main Pi HQ circuit board.

The Arducam board mounted on the back of the Pi HQ camera board

It is a shame that such a short and inflexible cable was supplied with the Arducam board.

The specifications of the Arducam board are disappointing, and suggest that little thought has gone into the purpose of the Raspberry Pi SBC or the Pi HQ camera.

The Raspberry Pi SBC is intended as a device for innovation, learning and application in many fields. The take-up of the device in areas other than education is testimony to its versatility.

The Raspberry Pi HQ camera is supposed to be exactly that! A High Quality camera, not just a high resolution camera! The purpose of the Arducam board is to convert the Pi HQ camera into a USB device that can be used with computers other than the Raspberry Pi; and it does this, but at a cost!

The Arducam board allows a variety of resolutions to be used over USB 2.0, from the highest resolution of 4032 x 3040 down to 640 x 480.

It is noteworthy that for the highest four resolutions, the specifications quote the frame-rates that can be achieved:

4032 x 3040 10fps

3840 x 2160 20fps

2592 x 1440 30fps

1920 x 1080 60fps

The last of these resolutions is Full HD 1080p.

This, in combination with the fact that the Arducam board only offers Motion JPEG video compression, shows that the priority for the manufacturer was to provide fast frame-rates (regardless of the quality of the frames being streamed).
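A rough calculation illustrates why the quoted frame-rates imply heavy compression. The sketch below is only an estimate: it assumes uncompressed YUYV at 2 bytes per pixel and takes the nominal USB 2.0 signalling rate of 480 Mbit/s (60 MB/s) as an optimistic ceiling, whereas real throughput is lower. The function names are illustrative and do not come from any Arducam software.

```python
# Nominal USB 2.0 signalling rate in bytes per second (assumption: an
# optimistic upper bound; usable throughput is lower in practice).
USB2_NOMINAL_BYTES_PER_S = 480e6 / 8

# (width, height, fps) for the four modes quoted in the specifications.
MODES = [
    (4032, 3040, 10),
    (3840, 2160, 20),
    (2592, 1440, 30),
    (1920, 1080, 60),
]


def uncompressed_rate(width, height, fps, bytes_per_pixel=2):
    """Bytes per second needed to send the stream uncompressed (YUYV assumed)."""
    return width * height * bytes_per_pixel * fps


def min_compression_ratio(width, height, fps):
    """Smallest compression ratio that would fit the stream into the nominal bus rate."""
    return uncompressed_rate(width, height, fps) / USB2_NOMINAL_BYTES_PER_S


if __name__ == "__main__":
    for width, height, fps in MODES:
        rate_mb = uncompressed_rate(width, height, fps) / 1e6
        ratio = min_compression_ratio(width, height, fps)
        print(f"{width} x {height} @ {fps} fps: {rate_mb:.0f} MB/s uncompressed, "
              f"needs at least {ratio:.1f}:1 compression")
```

Under these assumptions, each of the four modes would need between about 3.7 and 5.5 times the nominal bus rate if sent uncompressed, so the quoted frame-rates are only reachable with substantial JPEG compression of every frame.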

In fact, what has been done is to convert the Pi HQ camera into a webcam (but what an expensive webcam). No consideration has been given to the variety of applications that the camera could potentially be used for, or to the fact that some applications require high quality images and possibly long exposures, or even 12-bit output, and not necessarily high frame-rates!

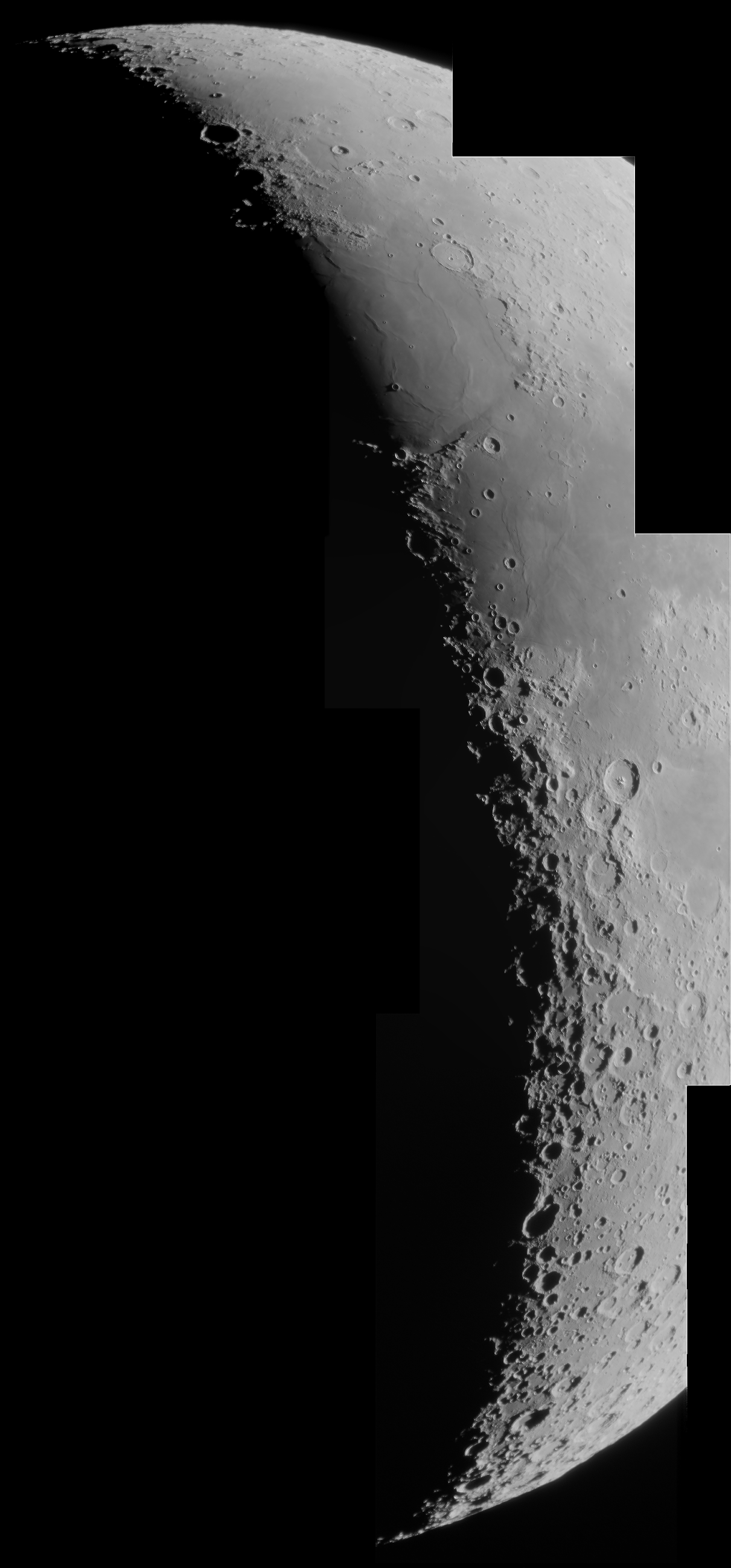

Testing the Arducam board plus the Pi HQ camera as a lunar imaging device.

The Arducam/Pi HQ camera was fitted with a Mogg adaptor and placed at the focus of a Bresser Messier-AR-102-xs/460 ED, f/4.5 refractor, on a Celestron AVX mount. AstroDMx Capture for Windows was used to capture a 1000 frame SER file of the 82.1% waxing gibbous Moon at the maximum resolution of 4032 x 3040.

The Arducam/Pi HQ camera mounted on the scope

Screenshot of AstroDMx Capture for Windows capturing the lunar SER file

The camera offers lots of controls including gain, exposure, gamma, contrast and a number of others. At this stage things look reasonable.

The best 75% of the frames in the SER file were stacked in AutoStakkert!, wavelet processed in RegiStax 6 and post processed in GIMP 2.10.
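For readers unfamiliar with this kind of frame selection, the idea can be sketched in a few lines of Python. This is not the quality estimator used by the stacking software, which is not described here; it is a generic stand-in that scores each frame by the sum of squared differences between neighbouring pixels, keeps the sharpest fraction, and averages them. Frames are plain lists of rows of grey values, all names are hypothetical, and the alignment step that real stacking software performs is omitted.

```python
def sharpness(frame):
    """Score a frame by the sum of squared differences between adjacent pixels."""
    total = 0
    for y in range(len(frame) - 1):
        row, below = frame[y], frame[y + 1]
        for x in range(len(row) - 1):
            # Horizontal and vertical differences; blurred frames score lower.
            total += (row[x + 1] - row[x]) ** 2 + (below[x] - row[x]) ** 2
    return total


def select_best(frames, keep_fraction=0.75):
    """Return the sharpest keep_fraction of the frames, best first."""
    keep = max(1, int(len(frames) * keep_fraction))
    return sorted(frames, key=sharpness, reverse=True)[:keep]


def stack_mean(frames):
    """Average the frames pixel by pixel (no alignment is attempted)."""
    n = len(frames)
    height, width = len(frames[0]), len(frames[0][0])
    return [[sum(f[y][x] for f in frames) / n for x in range(width)]
            for y in range(height)]


if __name__ == "__main__":
    # Four tiny synthetic 2 x 2 frames; the flat one carries no detail.
    frames = [
        [[5, 5], [5, 5]],
        [[0, 10], [10, 0]],
        [[0, 4], [4, 0]],
        [[0, 2], [2, 0]],
    ]
    best = select_best(frames, keep_fraction=0.75)
    print(len(best), "frames kept")
    print(stack_mean(best))
```

Averaging many frames reduces random noise, but it cannot restore detail that was already discarded by compression before the frames reached the computer.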

Final image of the lunar disk

The final image still looks reasonable, but the devil is, as always, in the detail!

When viewed at full resolution, the image can be seen to be highly compressed! Virtually all of the fine detail on the lunar surface has disappeared in the JPEG compression. The result is a very unnatural looking lunar image devoid of the subtle finer details.

The highly compressed image

There is a lot of fine detail in this image that is obliterated by the high compression in the Pi HQ/Arducam image.

This image can be compared with an image of the Clavius region of the Moon taken with an SV305, a camera that does not compress the video stream.

As it stands, the Arducam board does not convert the Pi HQ camera into a USB camera that is of any value for astronomical imaging. When used alone with the Raspberry Pi SBC as it is intended to be used, the Pi HQ camera is a promising astronomical imaging device.

What would be required for the Arducam board to be useful with the Pi HQ camera as an astronomical imaging device?

- Uncompressed YUYV, whatever the effects on frame-rate.

- Availability of RAW, undebayered data.

- Region of Interest resolutions.

- Long exposures.

- 12-bit data output.
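To put the first and last of these wishes in perspective, the following sketch estimates the best frame-rate that uncompressed data could reach over USB 2.0. It is an estimate under stated assumptions: the nominal 480 Mbit/s signalling rate is used as an upper bound (real throughput is lower), YUYV is taken as 2 bytes per pixel, and 12-bit raw data is assumed to be tightly packed at 1.5 bytes per pixel. The region-of-interest size is a hypothetical example, not a mode the board offers.

```python
# Nominal USB 2.0 signalling rate in bytes per second (assumed upper bound).
USB2_NOMINAL_BYTES_PER_S = 480e6 / 8


def max_fps(width, height, bytes_per_pixel):
    """Upper bound on frames per second for uncompressed frames of this size."""
    frame_bytes = width * height * bytes_per_pixel
    return USB2_NOMINAL_BYTES_PER_S / frame_bytes


# label -> (width, height, bytes per pixel); the ROI entry is hypothetical.
CASES = {
    "Full frame, YUYV": (4032, 3040, 2),
    "Full frame, packed 12-bit raw": (4032, 3040, 1.5),
    "1280 x 960 region of interest, packed 12-bit raw": (1280, 960, 1.5),
}

if __name__ == "__main__":
    for label, (width, height, bpp) in CASES.items():
        print(f"{label}: at most {max_fps(width, height, bpp):.1f} fps")
```

Even under these optimistic assumptions the full frame would arrive at only two or three frames per second. That is slow for a webcam, but it may well be acceptable for lunar or deep-sky work, and a region of interest would raise the rate considerably.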

Until such time as these things become a reality, it will be necessary to confine the use of the Pi HQ camera to operation with the Raspberry Pi computer in the way that it was designed, and to attempt to extract data from the camera in as high a quality as possible. Sadly, documentation on using the camera is scant and it will be up to individuals to explore the camera as an astronomical imaging device.

If the application were different, for example some sort of surveillance or wildlife observation camera, where a large moving image is required, then the Arducam in conjunction with the Pi HQ camera would provide a solution.